Did You Know?

The basic principles of A/B testing were established in the 1920s through agricultural testing.

In the world of digital marketing, A/B testing is an important tool to ensure you are continually improving on the results you have achieved. Whether you’re testing different Google Ads variants, trialling a new email layout or simply deciding which Call To Action is best for the button on your landing page, it’s important to keep producing new iterations to evolve your campaigns.

The idea of an A/B test is simple in theory – you pit two different versions of something against each other and see which one performs best. However, there are a few golden rules that you should follow when running tests like this to ensure you get the most out of your experiments. After all, A/B testing can be quite time-consuming, so it’s important that you can be confident in your results.

Choose The Right Goal

Depending on what it is you are testing, you will need to define what success will look like. Which data is going to indicate a positive outcome? Generally, you know that you will be implementing the version of your content that performs best, but how will you decide this?

Different pieces of content will need different approaches. For example, if you are testing two iterations of a product page then the winner will be the one that drives the most sales. Therefore, you should look at things like the number of Conversions and Conversion Rate.

On the other hand, if you plan to test two variants of a Facebook ad, the Conversion Rate will be contingent on the landing page, so the ad creative’s success will be better highlighted by things like Click Through Rate and Cost Per Click.

Whatever it is, be sure to decide on the goal(s) before you run the test and always keep them in mind when you’re evaluating the results.
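The metrics mentioned above are all simple ratios, so it can help to see them side by side. The snippet below is a minimal sketch in Python: the `VariantStats` class and every figure in it are hypothetical and purely illustrative, not data from a real campaign. Conversion Rate is calculated here as conversions divided by clicks, which is one common definition; your ad platform may define it differently.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw counts for one variant of a test (hypothetical structure)."""
    impressions: int
    clicks: int
    conversions: int
    spend: float  # total cost in your account currency

    @property
    def click_through_rate(self) -> float:
        # Share of impressions that led to a click
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cost_per_click(self) -> float:
        # Average amount paid for each click
        return self.spend / self.clicks if self.clicks else 0.0

    @property
    def conversion_rate(self) -> float:
        # Share of clicks that led to a conversion (assumed definition)
        return self.conversions / self.clicks if self.clicks else 0.0


# Made-up numbers for two ad variants
variant_a = VariantStats(impressions=10_000, clicks=200, conversions=10, spend=150.0)
variant_b = VariantStats(impressions=10_000, clicks=250, conversions=10, spend=150.0)

for name, stats in (("A", variant_a), ("B", variant_b)):
    print(
        f"Variant {name}: CTR {stats.click_through_rate:.2%}, "
        f"CPC {stats.cost_per_click:.2f}, "
        f"Conversion Rate {stats.conversion_rate:.2%}"
    )
```

In this made-up example, variant B has the better Click Through Rate and Cost Per Click while variant A has the better Conversion Rate, which is exactly why the goal needs to be fixed before the test starts.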

Test One Thing At A Time

Because A/B testing is usually quite a slow process, it can be tempting to combine more than one test and change multiple things between versions. However, doing this will seriously muddy the waters and leave you questioning exactly what made option A perform better than option B.

The best approach is to lay out everything you want to test and order the items by priority. Then, starting with the most important, test one thing at a time before moving on to the next one. That way you can be far more confident that the aspect you changed was the reason for any increase in performance.

Ensure The Test Runs For Long Enough

Just how long should an A/B test last? The fact is that there is no one answer to this question that will be true in every scenario. The length of the test will ultimately depend on your goals and the rate at which you can achieve the events you’re looking to maximise.

For example, if the test is to see which CTA button works best on an email, as long as you have a big enough list to send it to, you will likely have an answer after just one send. However, for actions that require a little more investment on the user’s part, such as purchasing an item from a product page, it will take longer to gain enough data for a reliable result.

In general, it’s best to run most A/B tests for between two and six weeks. However, you should never be afraid to extend this if you feel more data is needed or even run the whole test for a second time if you feel the results were not conclusive enough.
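If you would like a rough figure rather than a rule of thumb, a standard sample size calculation for comparing two conversion rates can give you one. The sketch below uses only Python's standard library. The function names, the 5% significance level, the 80% power and the example traffic figures are all assumptions chosen for illustration, so treat the output as a ballpark and not as a guarantee.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect the given change.

    Uses the normal approximation for a two-sided comparison of two
    proportions. alpha and power are conventional defaults (assumptions).
    """
    if baseline_rate == expected_rate:
        raise ValueError("The two rates must differ to estimate a sample size.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def days_needed(visitors_per_variant, daily_visitors, variants=2):
    """Days required if daily traffic is split evenly across all variants."""
    return math.ceil(visitors_per_variant * variants / daily_visitors)


# Hypothetical scenario: 5% Conversion Rate today, hoping to reach 6%,
# with 600 visitors a day shared between two versions of the page.
needed = sample_size_per_variant(0.05, 0.06)
print(f"Visitors needed per variant: {needed}")
print(f"Estimated test length in days: {days_needed(needed, daily_visitors=600)}")
```

The smaller the improvement you are trying to detect, or the less traffic you have, the longer the test needs to run. That is why low-volume actions such as purchases take longer to test than high-volume actions such as email clicks.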

Examples Of Recent Tests At Reach Digital

Google Ads Campaign Type Test

When Google introduced Performance Max campaigns in 2021, they were hailed as an all-singing, all-dancing option to cover all possible bases within Google Ads. However, with less control given to the user and fewer data points offered in the results, it’s not a given that this is the best type of campaign for all clients.

With this in mind, we have recently run tests between standard Search campaigns and Performance Max (PMax) campaigns to see which brings the best results. For e-commerce websites, it’s also a good idea to test between Shopping campaigns and Performance Max. Our test was quite close, which led to it being extended, but ultimately showed that PMax was the better choice this time.

Landing Page Test

Landing page tests are one of the things we experiment with most often. This is usually because there are so many elements to consider and creating the right combination can make a big difference to Conversion Rate.

For the most recent test, we wanted to see what effect moving the contact form higher up the page would have on the number of leads. We launched an experiment within Google Ads and had 50% of the traffic go to the original page and 50% go to the new version. In this instance, after a month of testing, the variant with the form nearer the top won out.

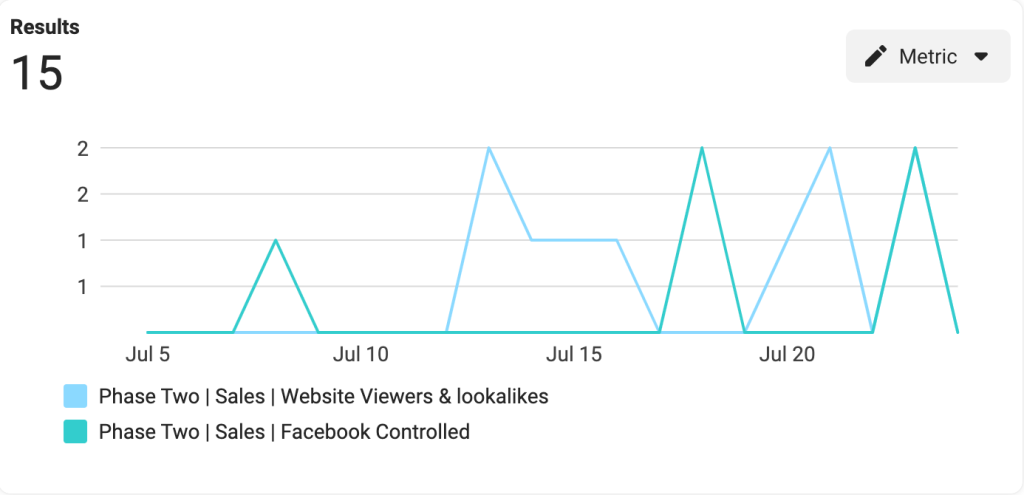
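When a 50/50 split like this finishes, one common way to check whether the difference between the two pages is likely to be real and not just noise is a two-proportion z-test. The sketch below is a generic illustration in Python, not the calculation Google Ads performs and not the method used for the test described above. The function name and the visitor and lead counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, p_value) for the difference between two conversion rates.

    Two-sided test using the pooled normal approximation, which is
    reasonable when each variant has plenty of visitors and conversions.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_error == 0:
        raise ValueError("Cannot test: no variation in the data.")
    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up figures: original page (A) versus the page with the form higher up (B)
z, p_value = two_proportion_z_test(
    conversions_a=40, visitors_a=1000,
    conversions_b=62, visitors_b=1000,
)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

A small p-value (below 0.05 is a widely used, if arbitrary, threshold) suggests the difference is unlikely to be down to chance alone. If the p-value is larger, that is a good sign the test should be extended or repeated.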

Meta Audience Test

When running Meta Ad campaigns, there are many different audience options to scrutinise. More and more, Meta is pushing advertisers to choose its Advantage+ set-up options, but it’s important to not just take it for granted that this will be the best choice.

Advantage+ allows Meta to decide the audience targeting itself, using machine learning to pinpoint the users most likely to respond to your ad. However, various other audience types may bring about better results. For new campaigns/accounts, we always like to test the Advantage+ audience against an interest-based alternative and an audience created using a combination of customer data and lookalikes. In this particular test, we ran the latter against a Meta-controlled audience for three weeks and established that customer data was the better targeting option this time.

The world of A/B testing is a vast one and every situation requires its own set of considerations and success indicators. However, if you remember the golden rules above and take a flexible approach each time, the learnings you’ll establish will have a powerful effect on your digital marketing.

About the author

Chris Mayhew has extensive experience in a wide range of digital marketing practices, focussing predominantly on paid media. By implementing iterative A/B tests, he optimises Google and Meta Ad campaigns to maximise results.